
A modern web application is obviously implemented in JavaScript and will generate its HTML on the client within the browser. It only communicates with the server by retrieving data in a JSON format from an HTTP/REST API endpoint - this, it seems, is the common wisdom of today. But are server-side HTML and progressive enhancement really out of date? Quite the contrary is true - we can use these technologies to create applications which are often much better than those which we could create with the Framework of the Week for creating single page apps.

Let’s look at the problems that often occur when using single-page applications (SPAs). In the traditional separation between server and client, the client is responsible for the appearance and behavior. State, business logic, routing, presentation logic, and templating are found exclusively on the server (Fig. 1).

When we build an app and want to optimize it to respond more quickly and possibly provide offline capabilities, we often move some responsibilities from the server to the client. Many companies consider it an additional benefit if we can separate these responsibilities into different departments. Backend experts then only need to provide an API and do not need to take care of any HTML/CSS/JS. Additionally, there is a common misconception that JSON is smaller than HTML. Jon Moore explains in an article why that is not the case. Often the view logic and the templating are moved into the frontend. This is the case with technologies like React or Vue.js (Fig. 2).

A further step is often to push the routing into the client. For example, this is what happens in React with React Router and in Vue.js with Vue Router. In frameworks like Angular or Ember, the client is also responsible for the routing (Fig. 3).

It may seem that the shift of responsibilities from the server to the client is only a change of boundary. However, this change in boundary has further consequences that we need to consider.

We used to only interpret HTML in the client - a functionality which the browser provides out of the box. Now we need a JSON client and an API which we can consume. This also adds a level of indirection: in the presentation logic we no longer have direct access to the business logic and the application state but must instead transform data into JSON and back again. Defining and modifying this API requires a high level of coordination and communication especially when the server and client are being developed by different teams.

If both the business logic and the application state remain completely on the server, we either have the same or more communication between client and server. If we however move a part of the business logic onto the client, we now need to continually decide which business logic belongs on the server, which business logic belongs on the client, and which needs to be duplicated in both. Here we need to pay attention to the fact that the client is not a secure or trusted environment. For this reason, we also need to verify a lot of things on the server. GraphQL is an example of a technology which attempts to use the backend as only a “database” with no business logic on the server.

The state of an application is also no longer only on the server (database/session…). We have to maintain state in the client as well. We can achieve this with solutions like LocalStorage/PouchDB and also with technologies like Redux, Flux, Reflux, and Vuex. Here we also need to decide which data belongs to the client, which data belongs to the server, and which needs to be saved in both places. We also need to think about (multi-leader) synchronization: because data can be simultaneously modified on more than one client, which could be offline for longer periods of time, there will definitely be write conflicts which need to be resolved.
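How such a write conflict shows up can be illustrated with a minimal sketch. It assumes that every record carries a revision counter (`rev`) and that each client sends along the revision its edit was based on (`baseRev`). The function `applyWrite` and the record shape are hypothetical and not taken from PouchDB or any other library.

```javascript
// Hypothetical server-side store: each record carries a revision counter.
function applyWrite(store, write) {
  const current = store.get(write.id);
  const currentRev = current ? current.rev : 0;

  // The client edited an outdated copy: someone else wrote in the meantime.
  if (write.baseRev !== currentRev) {
    return { ok: false, conflict: { server: current, client: write } };
  }

  const next = { id: write.id, rev: currentRev + 1, data: write.data };
  store.set(write.id, next);
  return { ok: true, record: next };
}

const store = new Map();
const first = applyWrite(store, { id: "note-1", baseRev: 0, data: "draft" });

// Two clients both edit revision 1; the second one was offline for a while.
const online = applyWrite(store, { id: "note-1", baseRev: 1, data: "edited online" });
const offline = applyWrite(store, { id: "note-1", baseRev: 1, data: "edited offline" });
```

Detecting the conflict is the easy part. Whether to merge, overwrite, or ask the user still has to be decided for each kind of data.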

The change in the boundary between client and server also means that we need to execute more JavaScript code on the client. The time that the browser needs to parse and execute JavaScript code is underestimated by many. This also means that pages with a lot of JavaScript can have a slower start time. To avoid this, many frameworks offer hydration: the application will first be rendered on the server with the help of Node and delivered to the client as HTML. When the JS is loaded, it takes over the markup and from this point on, the application is rendered on the client.

A further reason for hydration is SEO: since JS is not reliably interpreted by search engines, it makes sense to provide content as HTML. With hydration we add not only further complexity to the client but also extra infrastructure.

Execution in an uncontrolled environment

Browsers are also a more uncontrolled execution environment than our server. We need to pay attention to this when we push more code into the browser. One point is that observability is worse. On the server we can keep logs and catch and log exceptions. We can also do this on the client, but it is much more complex and easier to make mistakes. We should also pay attention to some factors concerning performance. We often test our JavaScript on machines which are very fast. But on mobile devices the time that is needed to process JavaScript is very high. You can find details here.


JavaScript is executed in a single thread. Only I/O operations are executed asynchronously outside of this thread. A CPU-task blocks the thread. CPU intensive tasks therefore cause the page to become unresponsive. If we execute a lot of business logic, we thereby block the thread and the page feels frozen. Today we can execute code on a separate thread with the help of Web Workers. However, this isn’t supported on older browsers which further decreases the performance on older, slower devices.
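Where a Web Worker is not an option, one common workaround is to split a long computation into small batches and give control back to the event loop between them. The following is only a sketch of that idea. The name `processInChunks` and the default batch size are assumptions made for illustration.

```javascript
// Process items in small batches and yield to the event loop in between,
// so that input events and rendering are not blocked for the whole run.
function processInChunks(items, handleItem, chunkSize = 100) {
  return new Promise((resolve, reject) => {
    const results = [];
    let index = 0;

    function runChunk() {
      try {
        const end = Math.min(index + chunkSize, items.length);
        for (; index < end; index++) {
          results.push(handleItem(items[index]));
        }
      } catch (error) {
        reject(error);
        return;
      }
      if (index < items.length) {
        setTimeout(runChunk, 0); // let pending events run before the next batch
      } else {
        resolve(results);
      }
    }

    runChunk();
  });
}
```

This does not make the work any faster; it only keeps the page responsive while the work is being done. The total cost still has to be paid on the user's device.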

Additionally, the uncontrollable execution environment also has certain repercussions. On the server we can choose a version of our programming language (e.g. JDK 7). However, there is an unbelievable number of web browsers in many different versions. Even the newest version of a browser does not support all of the specified features. The complexity of testing an application is therefore much higher.

In summary, shifting routing, templates and presentation logic onto the client increases the responsibility that it has. Additionally, more infrastructure, coordination, duplication, and indirection are necessary and we transport code from a controlled execution environment into an uncontrolled execution environment.

An alternative architecture

Let’s take a step back and compare the architecture from the previous section with a classic GUI application which we would develop with .NET, Swing, or Eclipse RCP. We will realize that the differences are pretty small. This seems very attractive for some teams, especially when they have not yet developed any web applications. Experience with a 3-layer architecture can be transferred onto the web. The execution environment is no longer Windows, with the CLR or the JVM, but the JavaScript runtime environment in the browser.

Unfortunately, despite its popularity, this approach ignores the actual strength of the web which has been shaped by declarative languages and which therefore has an extremely error-tolerant architecture. A change in technology can illustrate this: the browser is a highly performant graphics engine and is the most optimized application on practically all platforms. It is implemented in C, C++ or other low-level languages like Rust and exploits the hardware acceleration from the underlying systems as much as possible. We can use these capabilities by accessing them via API from JavaScript. Alternatively, we can use the declarative languages HTML and CSS which were developed especially for this environment. The low-level code of the browser can parse, interpret and transform them into the document object model (DOM) for rendering faster than anything else.

Because these languages were created exactly for this purpose, their performance is optimal. Additionally, they are declarative and especially error-tolerant in the adverse execution environment of the browser that is described above. If there is an error in JavaScript code, an exception is thrown and the rest of the code will not be executed. CSS, on the other hand, skips over unknown rules, and HTML treats unknown tags as <span> elements. Their declarative nature also makes it possible to ensure compatibility between browser versions to a great extent. This is how server-side rendered HTML and CSS pages can be interpreted by older and newer browsers, and even devices which did not exist at the time of the creation of the code can usually interpret them to the extent that they are usable.

Those who write server-side code can consider HTML as a component configuration: it is possible to use existing components such as text and input fields, select elements (lists), and video and audio elements without implementing them again. Naturally, JavaScript is also used, but is no longer a necessary ingredient for a working page and instead is an additional element which can improve the ergonomics or the look of the page.

We recommend this approach of relying on the basic features of the web and using these three key technologies based on these core principles. For this reason, we have created a website with best practices and given the approach a name: ROCA (ROCA stands for Resource Oriented Client Architecture).

How can I apply this practically?

Up to now we have talked about the abstract architecture, but what does this look like in practice? Can we really imagine a web application without JavaScript? We have maybe become used to retrieving some JSON structure from the server and using it to generate DOM elements. But JSON is not the language of the web. HTML is the language of the web. Why do we need to take an additional step so that a JavaScript framework can create HTML from our JSON? Almost every backend framework includes a templating engine with which we can directly generate HTML and send it to the client. For instance, the default Spring-Boot application comes bundled with Thymeleaf, and we can use it to directly deliver HTML pages without using any kind of JavaScript framework.
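The idea of generating HTML directly on the server can be sketched in a few lines. The article's own example is Thymeleaf in a Spring Boot application; the sketch below uses JavaScript only to stay in one language across the examples. The functions `escapeHtml` and `renderTaskList`, the URL scheme, and the data are hypothetical.

```javascript
// Escape text so that user-provided values cannot inject markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Hypothetical view: turns a list of tasks directly into HTML on the server.
function renderTaskList(tasks) {
  const items = tasks
    .map((task) => {
      const href = `/tasks/${encodeURIComponent(task.id)}`;
      return `<li><a href="${href}">${escapeHtml(task.title)}</a></li>`;
    })
    .join("\n");
  return `<ul class="task-list">\n${items}\n</ul>`;
}

const html = renderTaskList([
  { id: 1, title: "Write <article>" },
  { id: 2, title: "Review & publish" },
]);
```

A templating engine does the same job with less ceremony and usually escapes values by default. The point is that the business data never has to leave the server as JSON first.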

Make it work: HTML

If we want to develop an application without JavaScript, the first step is to create a web application which consists of only HTML. HTML5 includes quite a few elements with a lot of functionality. Three examples of these are:

- <input type="date"> creates a datepicker.

- <audio> creates a player for audio files.

- <video> creates a player for video files.

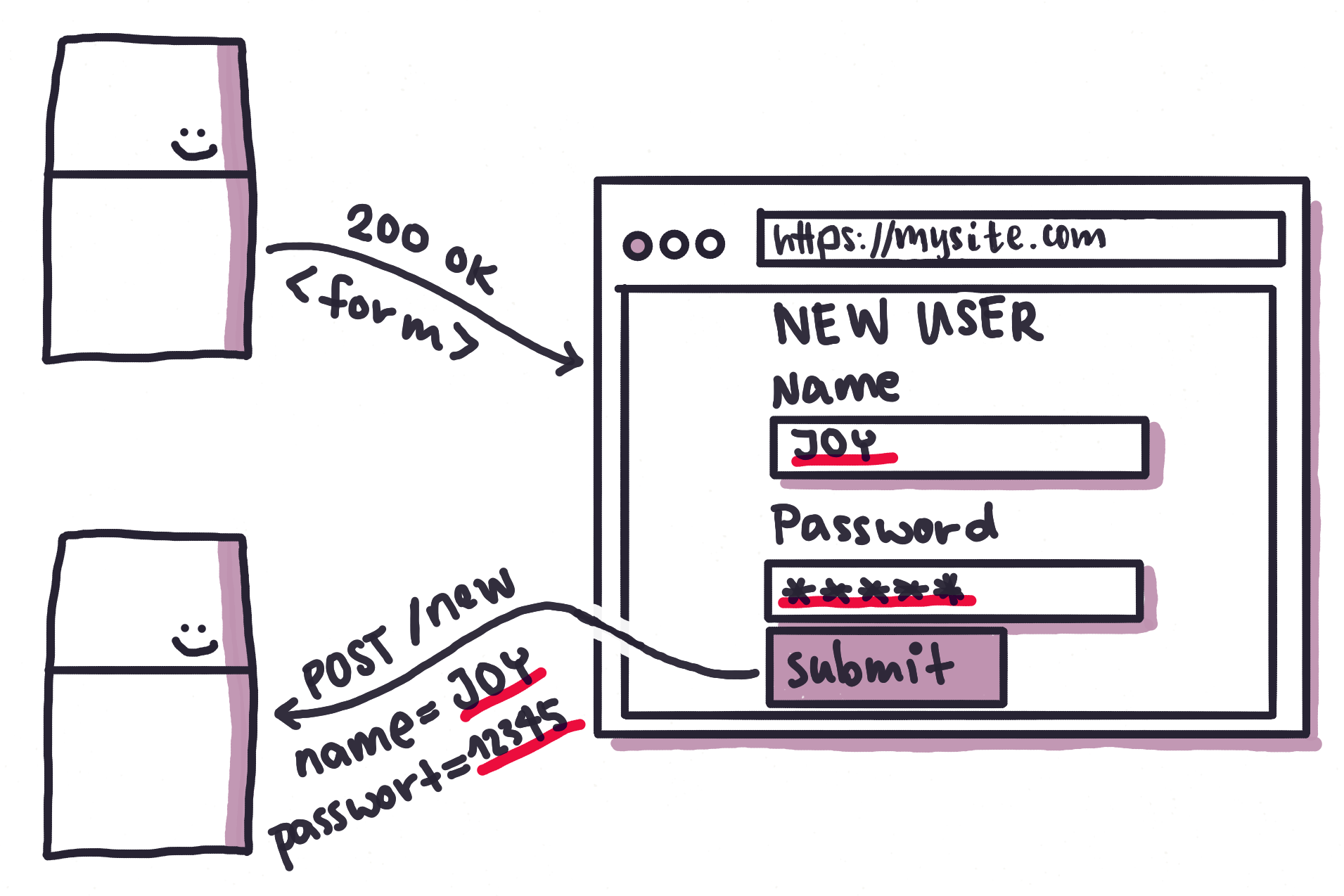

Additionally, there is always a fallback for these elements so that older browsers can still continue to work. Often, the browser can make better decisions about how to render these elements than we can. An <input type="date"> on a smartphone is optimized so that users can select a date with their finger, which makes it easier to use than the datepicker from a JavaScript library. We also should not forget that HTML is not only intended for presentation logic. With a simple link we can jump from one page to another. HTML forms are also extremely powerful and allow us to create dynamic HTTP requests based on user input that are then sent to the server. The server then executes the change that is requested by the user (Fig. 4).

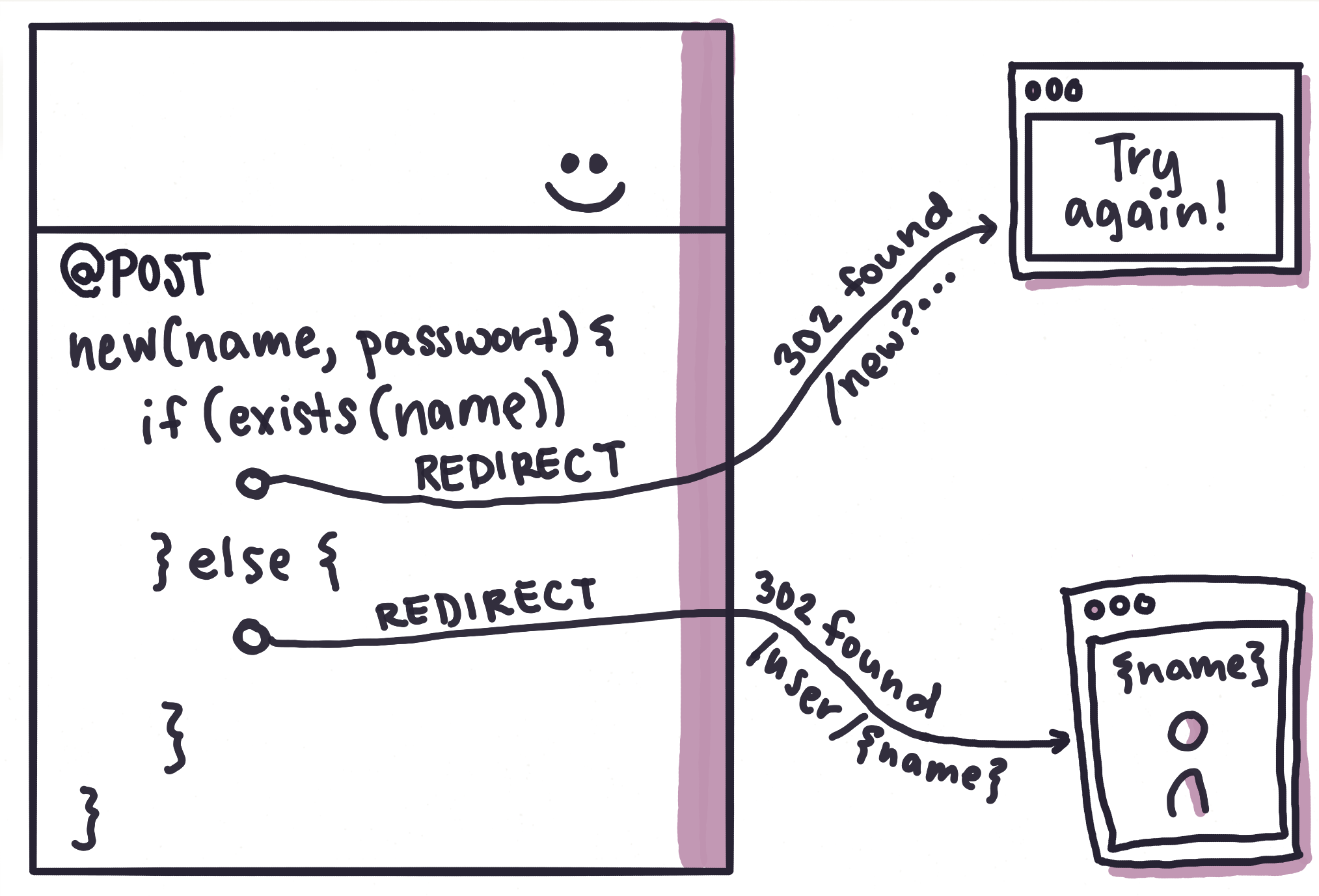

Sometimes we also want to show a different page based on user input. Since the server is now controlling the functionality in the application, it can decide where to go next with a redirect (Fig. 5).
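A server-controlled redirect after a form submission can be sketched as follows. The `request` object is a simplified stand-in for whatever the server framework provides, and `handleCreateTask`, the URLs, and the in-memory `tasks` array are assumptions made for illustration. The handler answers with the HTTP status 303 (See Other), which tells the browser to fetch the target page with a GET request.

```javascript
// Hypothetical in-memory state; a real application would use a database.
const tasks = [];

// Handle a form submission and answer with a redirect.
function handleCreateTask(request) {
  const form = new URLSearchParams(request.body);
  const title = (form.get("title") || "").trim();

  if (title === "") {
    // Validation happens on the server; send the user back to the form.
    return { status: 303, headers: { Location: "/tasks/new?error=title-missing" } };
  }

  tasks.push({ id: tasks.length + 1, title });
  return { status: 303, headers: { Location: `/tasks/${tasks.length}` } };
}

const created = handleCreateTask({ method: "POST", body: "title=Buy+milk" });
const rejected = handleCreateTask({ method: "POST", body: "title=++" });
```

Because the decision about the next page is made on the server, the client needs no routing logic of its own: it simply follows the `Location` header.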

One of the main benefits of this approach is that HTML can be easily consumed by screen readers. This makes it relatively easy to implement accessibility in the application. The A11Y Project is a good resource for further improvements. Of course, it is also possible to create a JavaScript-centric application which is accessible, but it requires greater effort and a lot of focus.

Reusability

Another benefit of HTML is that we can arbitrarily nest elements. In order to make our UI elements easier to reuse, we can extract some element into components. Components are a reusable HTML structure which we can use as an abstraction for our functionality.

To maintain an overview of our components, we can structure them in a system like Atomic Design. You can find more about them in an article by Ute Mayer on page 58. Here larger components always contain smaller components. The easiest way to practically apply this is to define an HTML snippet and to copy the markup and switch out the content in the component. However, this isn’t easy to maintain over time. Most templating engines provide a way to define an HTML snippet once and make it reusable. In Thymeleaf we can do this with th:replace and th:insert.

One of the main benefits of this approach is also that we can style our components based on this HTML structure. This is where the larger challenges begin.

Make it pretty: CSS

Now we come to the point where we may be likely to fail. On the one hand, we could argue that it is most important that the application works even if it doesn’t look very nice. On the other hand, no one wants to use an application which is ugly. In this context, certain aesthetics are important.

The look and feel of an application play an important role in addition to the functionality of an application. These four points can be used as a checklist:

- Color and color contrast

- Typography

- Spacing between rows and images

- Icons, Images, and Diagrams

Although “beauty” is subjective, we can invest a lot of time in topics like design or typography in order to develop a feeling for how things should appear in a finished product. Unfortunately, many developers do not have the necessary time or desire to really get into the topic.

Luckily, we don’t always have to reinvent the wheel. It is possible to use finished component libraries like Bootstrap or Foundation. Here the designers have already made the most important decisions so that the application is usable for end users.

For those who want to modify a component library and extend it with additional components, a pattern library toolkit like Fractal is very useful. It is especially helpful when components should be reused between projects. For larger CSS projects we recommend using a preprocessor like Sass so that CSS can be split into multiple files. Then the CSS for a specific HTML component can be placed directly beside the component in the same folder. Here we can use a naming convention like BEM for the CSS components. An asset pipeline like faucet-pipeline can be used to compile the CSS. When we are also writing JavaScript for our application, we can use faucet for that as well.

Make it fast: JavaScript

We have reached a point where we can stop: we have a working web application that looks pretty. However, we can now optimize and improve our application with JavaScript. The reason why an SPA often feels faster is that the browser does not have to parse and evaluate the CSS and JavaScript with every page transition. With a library like Turbolinks we can achieve exactly this: when a user clicks on a link, the HTML on the page is replaced without having to reload the CSS and JavaScript.

With HTML forms we can achieve something similar: instead of sending the browser the content of a form and retrieving the result as a new page, we can submit the form via Ajax and work with the HTML which is returned and replace only parts of the page.

It is not necessary to build a single page app in order to avoid reparsing CSS and JavaScript with every page load.

In order to bring the content from several pages together in the browser, we can transclude links - this means that a link is replaced by its content. To do this, some generic JavaScript code can “follow” the link - e.g. use Ajax to send the request to the server - and incorporate the result directly in the page.
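Transclusion can be sketched with a small generic function. It assumes that the server answers the link's URL with an HTML fragment from the same, trusted origin. The function name `transclude` and the `data-transclude` attribute are hypothetical, and `fetchImpl` is only a parameter so that the function can be exercised outside a browser.

```javascript
// Replace a link with the HTML fragment it points to.
async function transclude(link, fetchImpl = fetch) {
  const response = await fetchImpl(link.href, { headers: { Accept: "text/html" } });
  if (!response.ok) {
    return false; // keep the plain link as a working fallback
  }

  // Parse the returned markup in a detached container, then move its nodes.
  const container = link.ownerDocument.createElement("div");
  container.innerHTML = await response.text();
  link.replaceWith(...container.childNodes);
  return true;
}

// Wire it up only where a DOM exists (i.e. in the browser).
if (typeof document !== "undefined") {
  document.querySelectorAll("a[data-transclude]").forEach((link) => {
    transclude(link).catch(() => {
      // Network error: the link simply stays a normal link.
    });
  });
}
```

If the request fails or the script never runs, the user still sees an ordinary link that leads to the same content.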

This is especially attractive because in all of these three cases the effect is similar to that of a single page app and page transitions work fluently. However, the page also works when JavaScript is not there, is buggy, has not yet loaded or for some other reason cannot be executed.

For the JavaScript improvements in our application, we can now use custom elements to define our own HTML elements in the browser:

    class MyComponent extends HTMLElement {
      connectedCallback() {
        // instantiate component
      }
    }

    customElements.define("my-component", MyComponent);

The benefit here is that the logic that is defined in the connectedCallback function will always be executed by the browser when a <my-component> element is created in the HTML DOM. This also works together with Turbolinks or transclusion because the browser takes care of the instantiation of the JavaScript as soon as the element appears in the DOM.
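A slightly more concrete sketch shows how a custom element can enhance server-rendered markup instead of replacing it. The element name `char-counter`, the helper `remainingCharacters`, and the wrapped markup (a `<textarea maxlength="…">` inside the element) are assumptions made for illustration. The sketch also assumes that the script is loaded with `defer` or at the end of the document, so that the element's children already exist when `connectedCallback` runs.

```javascript
// Pure helper, independent of the DOM.
function remainingCharacters(text, maxLength) {
  return Math.max(0, maxLength - text.length);
}

// Only register the element where custom elements exist (i.e. in a browser).
if (typeof customElements !== "undefined") {
  class CharCounter extends HTMLElement {
    connectedCallback() {
      const field = this.querySelector("textarea[maxlength]");
      if (!field) return; // nothing to enhance, the plain markup keeps working

      const output = document.createElement("output");
      this.append(output);

      const update = () => {
        const left = remainingCharacters(field.value, field.maxLength);
        output.textContent = `${left} characters left`;
      };
      field.addEventListener("input", update);
      update();
    }
  }

  customElements.define("char-counter", CharCounter);
}
```

Without JavaScript, the browser treats `<char-counter>` as an unknown element and still renders the textarea inside it, including the native `maxlength` behavior.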

SPA vs. ROCA

We have experienced in practice that when we develop applications with server-side rendered HTML and custom elements, carefully modularized CSS, and a small amount of JavaScript, we can create architecturally clean, lean, fast, and ergonomic applications. We do not fear the comparison of these applications with those created with popular SPA approaches - in many aspects they are often superior. We find that they not only have a lower communication overhead, a higher fault tolerance, and better accessibility, but are also easier to maintain in the long run. We are dependent on the browser and its standards instead of being dependent on an SPA framework which could change completely in the next version or could be replaced by the next alternative framework. For this reason, we recommend investing your limited time for learning new technologies into learning web standards like HTML, CSS, and JavaScript instead of a specific SPA ecosystem. In our experience, those who have built an application using the ROCA style afterwards tend to choose an SPA approach only as an exception and only for a good reason, e.g. if offline capability is a requirement.