In my previous post, I described how to setup logging with docker and the Elasticstack. In this article, I will refer to the Elastic Stack (Elasticsearch and Kibana), but other tools work similarly.

With Elasticsearch in place, let’s discuss how to make better log statements to benefit from the searchable log aggregator. The examples are based on a Spring Boot application using the default logging framework Logback with SLF4J.

First, we enable JSON logging in Spring Boot. Then, we discuss how to write log statements to benefit from the structured log format.

Enable JSON logging

When using log aggregation and log indexing tools such as Elastic Stack, Splunk, or Graylog, it is best practice to generate logs in a structured format. Today, that format is JSON.

So, instead of (or additionally to) logging as traditional console output

22:53:03.689 [main] INFO MyApplication - Order 123 savedthe log output is generated as a single line JSON:

{"@timestamp":"2019-04-16T22:53:03.689+02:00","@version":"1","message":"Order 123 saved","logger_name":"MyApplication","thread_name":"main","level":"INFO","level_value":20000}For readability, all JSON examples will be rendered in a pretty-printed form in this article:

{

"@timestamp": "2019-04-16T22:53:03.689+02:00",

"@version": "1",

"message": "Order 123 saved",

"logger_name": "MyApplication",

"thread_name": "main",

"level": "INFO",

"level_value": 20000

}The structured key-value format enables log collectors (such as filebeat, fluentd) to efficiently ship the data to Elasticsearch. There is no need to parse and extract data with tools like Grok, which error-prone to extract the correkt information, especially for multiline entries.

In Spring Boot, the default log implementation is Logback with SLF4J. JSON output is enabled through the Logstash JSON Encoder, which needs to be added as an additional dependency:

<!-- pom.xml -->

<dependency>

<groupId>net.logstash.logback</groupId>

<artifactId>logstash-logback-encoder</artifactId>

<version>5.2</version>

</dependency>And enabled with an entry in the logback.xml configuration:

<!-- logback.xml -->

<configuration>

<appender name="jsonConsoleAppender" class="ch.qos.logback.core.ConsoleAppender">

<encoder class="net.logstash.logback.encoder.LogstashEncoder"/>

</appender>

<root level="INFO">

<appender-ref ref="jsonConsoleAppender"/>

</root>

</configuration>Tip: For unit tests, it is helpful to stay with the more compact traditional console output. This can be configured through Spring Profiles:

<!-- logback-spring.xml -->

<configuration>

<springProfile name="default">

<include resource="org/springframework/boot/logging/logback/base.xml"/>

</springProfile>

<springProfile name="prod">

<appender name="jsonConsoleAppender" class="ch.qos.logback.core.ConsoleAppender">

<encoder class="net.logstash.logback.encoder.LogstashEncoder"/>

</appender>

<root level="INFO">

<appender-ref ref="jsonConsoleAppender"/>

</root>

</springProfile>

</configuration>Structured Log Statements

OK, since JSON output is active now, let’s discuss how to write better log entries.

A traditional log statement often looks like this:

log.info("Order {} saved", 123);which produces

{

"@timestamp": "2019-04-17T18:02:44.979+02:00",

"@version": "1",

"message": "Order 123 saved",

"logger_name": "MyApplication",

"thread_name": "main",

"level": "INFO",

"level_value": 20000

}One problem with this log statement is that you cannot search for all “Order saved” entries, as the orderId is part of the message in between.

So let’s optimize it to:

log.info("Order saved orderId={}", 123);which produces

{

"@timestamp": "2019-04-17T18:07:42.123+02:00",

"@version": "1",

"message": "Order saved orderId=123",

"logger_name": "MyApplication",

"thread_name": "main",

"level": "INFO",

"level_value": 20000

}Now we can use Kibana to issue a search for all Order saved events or for orderId=123 to get all log statements for this orderId. However, this requires a full text search on the message field. It would be nice to have the orderId indexed in Elasticsearch as a separate field.

MDC

The traditional way is to use the Mapped Diagnostic Context (MDC), provided by all major logging frameworks:

String orderId = "123";

MDC.put("orderId", orderId);

log.info("Order saved");

MDC.remove("orderId");or using try-with-resources:

String orderId = "123";

try (var ignored = MDC.putCloseable("orderId", orderId)) {

log.info("Order saved");

}which both produce

{

"@timestamp": "2019-04-17T18:12:16.861+02:00",

"@version": "1",

"message": "Order saved",

"logger_name": "MyApplication",

"thread_name": "main",

"level": "INFO",

"level_value": 20000,

"orderId": "123"

}The LogstashEncoder writes all MDC entries to a separate JSON field. To show MDC entries in the classic console output, the appender layout pattern must be extended.

The MDC uses a ThreadLocal, so it is important to clear it after exiting the thread. The MDC is handy, it is complicated for multi-threaded applications and it does not work with reactive implementations out of the box, such as Spring WebFlux and Netty as requests are processed by multiple threads. See a workaround.

Structured Arguments

The logstash-logback-encoder library has the concept of event specific custom fields and brings Markers to add additional fields to the generated JSON output and StructuredArguments use these markers to add data to the JSON output and to the actual log message.

As markers have a terrible API, I highly recommend to use StructuredArguments. The normal {}-placeholders can be used, just wrap the data in a StructuredArguments.value() or StructuredArguments.keyValue() factory method:

import static net.logstash.logback.argument.StructuredArguments.keyValue;

String orderId = "123";

log.info("Order saved {}", keyValue("orderId", orderId));which produces

{

"@timestamp": "2019-04-17T18:27:01.099+02:00",

"@version": "1",

"message": "Order saved orderId=123",

"logger_name": "MyApplication",

"thread_name": "main",

"level": "INFO",

"level_value": 20000,

"orderId": "123"

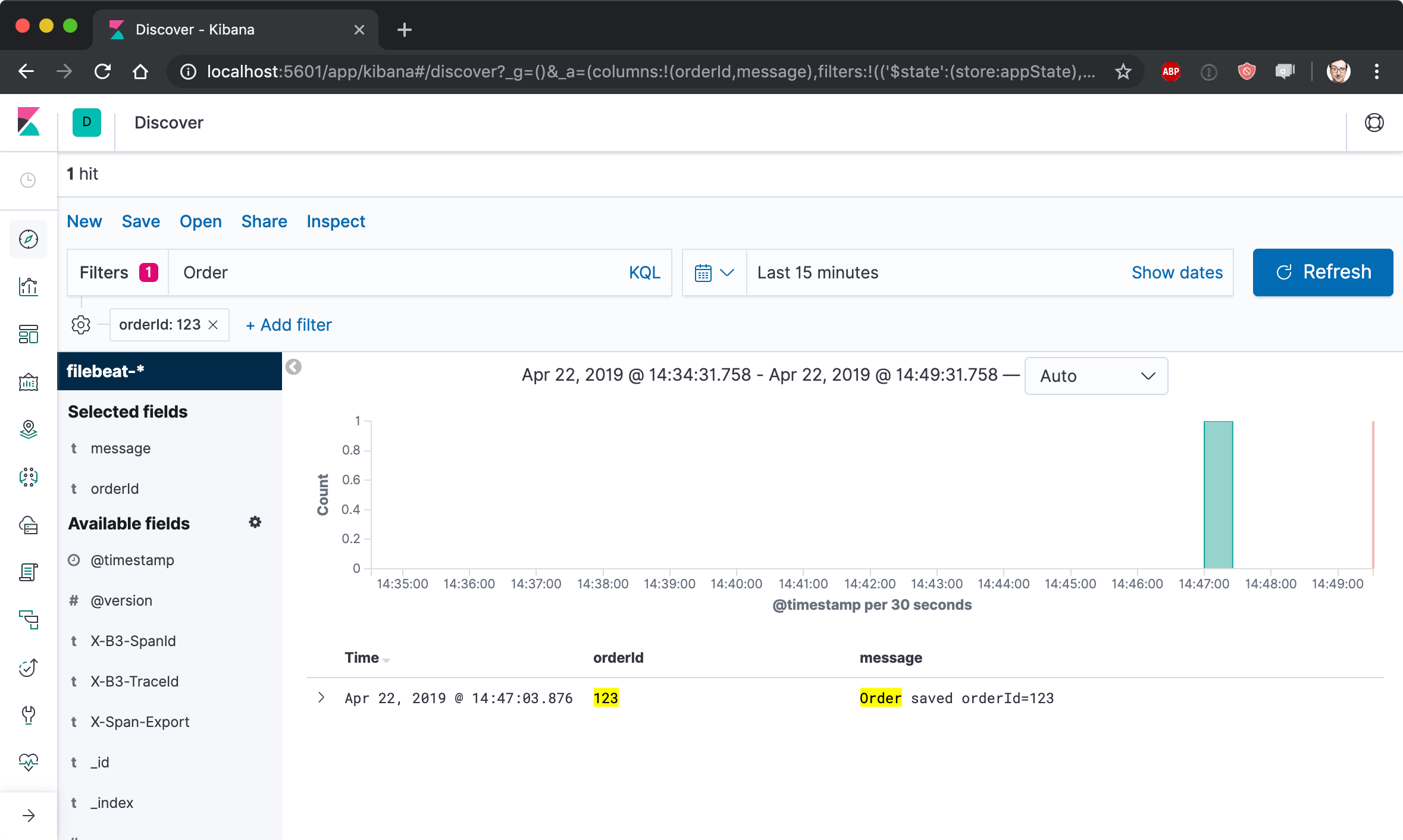

}The orderId is both added to the message (through the {}-placeholder) and to the JSON output as a separate field. This can be searched and displayed in Kibana:

Also, multiple fields are supported, it is optional to use them in the message, and there is a shorter kv alias to use:

import static net.logstash.logback.argument.StructuredArguments.kv;

String orderId = "123";

String status = "NEW";

log.info("Order saved", kv("orderId", orderId), kv("status", status));which produces

{

"@timestamp": "2019-04-17T18:29:14.079+02:00",

"@version": "1",

"message": "Order saved",

"logger_name": "MyApplication",

"thread_name": "main",

"level": "INFO",

"level_value": 20000,

"orderId": "123",

"status": "NEW"

}The value() or v() method does not write the key name to the message, while the field entry stays the same.

import static net.logstash.logback.argument.StructuredArguments.v;

String oldStatus = "NEW";

String newStatus = "READY";

log.info("Status changed {}->{}.", v("oldStatus", oldStatus), v("newStatus", newStatus));which produces

{

"@timestamp": "2019-04-17T18:27:01.099+02:00",

"@version": "1",

"message": "Status changed NEW->READY.",

"logger_name": "MyApplication",

"thread_name": "main",

"level": "INFO",

"level_value": 20000,

"oldStatus": "NEW",

"newStatus": "READY",

}And there is a nice feature to log complex objects:

Order order = new Order("123", "NEW", null);

log.info("Order saved", kv("order", order));

static class Order {

String orderId;

String status;

String canceled;

Order(String orderId, String status, String canceled) {

this.orderId = orderId;

this.status = status;

this.canceled = canceled;

}

String getOrderId() {

return orderId;

}

String getStatus() {

return status;

}

String getCanceled() {

return canceled;

}

}which produces a JSON representation of the object:

{

"@timestamp": "2019-04-17T18:34:10.437+02:00",

"@version": "1",

"message": "Order saved",

"logger_name": "MyApplication",

"thread_name": "main",

"level": "INFO",

"level_value": 20000,

"order": {

"orderId": "123",

"status": "NEW",

"canceled": null

}

}Summary

Always use JSON log output for complex applications and collect them to a log aggregator such as Elasticsearch.

Structured Arguments makes it easy to add additional data to the index with Spring Boot’s default logging framework Logback.

The main downside of using Structured Arguments is the compile dependency to logstash-logback-encoder (which is required for JSON output anyway as runtime dependency).

Structured log statements make it much easier to find related logs with analyzing tools, such as Kibana.

View code examples on Github.

Header Photo by Jan Antonin Kolar on Unsplash.