Installing Visual Studio 2008 on Windows Server 2008

24.03.08

Today I had a hard time installing Visual Studio 2008 on Windows Server 2008. I wanted to finally test WCF hosting in WAS (Windows Process Activation Service).

The installation failed several times with Error 1330:

"… d:\cab11.cab has an invalid digital signature …"

I tried copying the installation files to the hard disk, using different installation media, and mounting the ISO image, all to no avail.

Finally, I found a blog post on the issue by Heath Stewart. In the comments on his post, he recommends that you

check your performance settings, to see if the failed machines are set for Background Service Optimization? Also, are these Win2K3 machines or what platform are they?

On Vista: "Start"->Right click on Computer, select Properties. On the left, select "Advanced System Properties", click "Settings" under "Performance", then click the "Advanced" tab. Is "Programs" or "Background services" checked.

I switched the performance settings to "Programs" and also defragmented the target disk with the built-in defragmenter. Afterwards, the installation ran perfectly fine.

List of WCF Resources

21.03.08

Joe Stagner provides an extensive list of videos and labs for WCF:

So, here you go! Sixty Five Videos and Virtual Labs to make you a WCF Expert !

If you’re interested in the WCF Web Programming Model (RESTful WCF), you might take a look at Steve Maine’s WCF Web Programming Model Documentation.

New LINQ to XSD release

24.02.08

It has been a long time since the last preview of LINQ to XSD, which targeted Beta1 of Visual Studio 2008. Now Microsoft’s XML team is announcing a new release that works with Visual Studio 2008 RTM.

If you’re a VB developer, LINQ to XSD won’t mean anything to you. C# developers, however, will now be able to work with LINQ to XML in a type-safe manner:

The LINQ to XSD preview illustrates our initial thinking on a strongly-typed programming experience over LINQ to XML. Instead of working with untyped XML trees, LINQ to XSD allows you to program in terms of strongly-typed classes, generated based on an XSD schema.

Although LINQ to XSD fits into MS’s strongly typed thinking, you’ll lose the benefits of untyped XML trees, such as a uniform interface for accessing any XML.
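The trade-off can be illustrated outside .NET. The following Python sketch is only an analogy, not LINQ to XSD: it contrasts untyped access to an XML tree through `xml.etree.ElementTree` with a hand-written, strongly typed class standing in for one that a schema-driven generator might produce. The `Order` class and the sample document are hypothetical.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

# Hypothetical sample document. With LINQ to XSD the typed class would be
# generated from an XSD schema; here it is written by hand.
XML = "<order id='42'><customer>ACME</customer><total>99.50</total></order>"

root = ET.fromstring(XML)

# Untyped access: the same find/get/findtext calls work for any XML document,
# but element names are plain strings and every value comes back as text.
untyped_total = float(root.findtext("total"))


@dataclass
class Order:
    """Stand-in for a schema-generated class (hypothetical)."""
    id: int
    customer: str
    total: float

    @classmethod
    def from_element(cls, elem: ET.Element) -> "Order":
        # Conversion from text to typed values happens once, in one place.
        return cls(
            id=int(elem.get("id")),
            customer=elem.findtext("customer"),
            total=float(elem.findtext("total")),
        )


order = Order.from_element(root)
print(order.customer, order.total)
```

The typed class gives callers real attributes and types, but it only fits documents of this one shape; the ElementTree calls keep working for any XML, which is the uniform interface you give up.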

REST & SOAP/WS-* - two different views

06.02.08

For a very long time I’ve been promoting the SOAP/WS-* way of developing web services. After many discussions, articles, and posts on the REST/SOAP war, and some experimenting of my own, I have come to prefer the REST way. The main reason is the looser coupling between services that REST makes possible.

The most important difference when designing RESTful and SOAP/WS-* web services is how they view models and processes. In my opinion, REST demands a completely new way of designing services. Stefan provides a nice example of RESTful design on InfoQ.

Ganesh Prasad delves into the differences (or similarities) by setting up a Namespace-Time continuum, which is meant to unify both approaches. Mark Baker responds, and Ganesh updates his view.

Mark argues that “WS-* fails to separate interface from implementation, while REST does”. He provides an example task of changing the implementation of an interface and asks the question: “if I change the implementation of a component from a stock quote service to a weather report, does the interface have to change? If yes, then prima facie you haven’t decoupled interface from implementation, have you?”.

Ganesh responds:

Now, as an architect, I am rather sensitive to issues of tight coupling, and have often railed against examples of this, such as the SOAP-RPC style itself and the generation of WSDL files from Java implementation classes. But Mark goes much further. He would like to change the implementation of a service from a Stock Quote service to a Weather Report, and he would like to see his interface unchanged! To my mind, this goes beyond the reasonable.

I especially like, and agree with, his statement that Mark’s change is like “changing from pasta to soup, and expecting to continue using a fork”. It appears to me that both are talking about the same thing from different perspectives: Mark refers only to the generic, “technical” interface provided by REST, whereas Ganesh is concerned with the service design.

The RESTful approach requires mapping the service design logic onto combinations of HTTP verb and URI. Every combination has to be interpreted to get its meaning. In most cases the meaning is obvious; in some it isn’t. RESTful service design therefore has to put a strong emphasis on carefully choosing meaningful URIs, especially when doing without a service description such as WADL.
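To make the verb-plus-URI mapping concrete, here is a minimal Python sketch of a dispatch table in which each (HTTP verb, URI template) pair stands for one operation of a hypothetical order service. The routes and handler names are assumptions for illustration; no particular REST framework is implied.

```python
import re


# Hypothetical operations of an order service.
def list_orders():
    return "all orders"


def get_order(order_id):
    return f"order {order_id}"


def cancel_order(order_id):
    return f"order {order_id} cancelled"


# The meaning of each operation is carried only by the (verb, URI template) pair.
ROUTES = [
    ("GET", r"/orders", list_orders),
    ("GET", r"/orders/(?P<order_id>\d+)", get_order),
    ("DELETE", r"/orders/(?P<order_id>\d+)", cancel_order),
]


def dispatch(verb, path):
    """Call the operation whose verb and URI template match the request."""
    for route_verb, pattern, handler in ROUTES:
        match = re.fullmatch(pattern, path)
        if route_verb == verb and match:
            # Named groups in the template become keyword arguments.
            return handler(**match.groupdict())
    raise LookupError(f"no operation for {verb} {path}")


print(dispatch("DELETE", "/orders/7"))
```

Nothing in the table says that `DELETE /orders/7` means "cancel"; a client has to infer it from the verb and the URI, which is why the choice of URIs matters so much without a WADL-style description.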

Visual Studio 2008 Tip: Resolving Namespaces and Removing Unused Using Statements

29.01.08

For quite some time I’ve been wondering why Visual Studio still doesn’t meet the minimum requirements for developer productivity. Features that just about every Java IDE offers, such as finding classes, automatically resolving namespaces, and navigating to the definition of a class by simply pressing Ctrl and clicking the left mouse button, are missing. Instead, Visual Studio 2008 presents #1001 of the most annoying wizards ever.

ReSharper comes to the rescue, but it doesn’t support C# 3.0 yet. David Hayden shows how to get by with Visual Studio 2008 alone when it comes to namespaces:

It turns out, Visual Studio 2008 actually has good support for resolving namespaces and optimizing using statements that can get you the functionality if you are not using ReSharper.

[…]

Pressing Ctrl + . will bring up a context-sensitive menu that allows you to add a using statement or optionally fully qualify the path to the class.

[…]

The other nice thing that ReSharper does is remove unused using statements using Ctrl+Alt+O.

We can get that using Visual Studio 2008, because you may have noticed the cool context-sensitive Organize Usings Option:

[…]

I’ve given up hoping for something like “Call Hierarchy” in Visual Studio, or at least in ReSharper, but David’s tip saved me from uninstalling VS 2008.

DSLs, XML, and Fluent Interfaces

04.12.07

Ayende writes about the right time for a DSL.

Concerning XML vs. DSL:

If you need to do things externally, a DSL is the place to look for. In fact, if you feel the urge to put XML into place, as anything except strict data storage mechanism, that is a place to go with a DSL.

DSL vs. Fluent Interface:

Fluent Interfaces relies heavily on intellisense in order to create that fluent feeling. If you need to work on it outside the IDE, that is probably going to be a factor.

Extensibility is also a concern; let us go back to the scheduling sample. We have the scheduling engine, and we have the tasks themselves. Consider that to write a task using a DSL I usually have to write a small text file, but to write a task using a Fluent Interface requires creating a project, compiling, etc.

I totally agree with Ayende. A DSL is the perfect means for supporting external aspects of a software development project, especially when non-programmers are involved.
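Ayende’s scheduling example can be illustrated with a small Python sketch (all names are hypothetical, not Ayende’s code): the same task is defined once through a fluent interface, which lives in code and relies on IDE completion, and once as a line of plain text that a tiny external DSL parser turns into the same object.

```python
import re
from dataclasses import dataclass


@dataclass
class Task:
    name: str = ""
    every_minutes: int = 0
    command: str = ""


class TaskBuilder:
    """Fluent interface: each method returns self so calls can be chained."""

    def __init__(self, name: str):
        self._task = Task(name=name)

    def every(self, minutes: int) -> "TaskBuilder":
        self._task.every_minutes = minutes
        return self

    def run(self, command: str) -> "TaskBuilder":
        self._task.command = command
        return self

    def build(self) -> Task:
        return self._task


# External DSL: a one-line text format that a non-programmer could edit, e.g.
#   task "backup" every 30 minutes run "backup.cmd"
DSL_LINE = re.compile(
    r'task "(?P<name>[^"]+)" every (?P<minutes>\d+) minutes run "(?P<command>[^"]+)"'
)


def parse_task(line: str) -> Task:
    match = DSL_LINE.fullmatch(line.strip())
    if match is None:
        raise ValueError(f"cannot parse: {line!r}")
    return Task(match["name"], int(match["minutes"]), match["command"])


fluent = TaskBuilder("backup").every(30).run("backup.cmd").build()
from_text = parse_task('task "backup" every 30 minutes run "backup.cmd"')
```

Adding another task in the DSL version means adding another line to a text file; the fluent version needs a code change and a redeployment, which is the extensibility concern Ayende raises.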

Larry Lessig: How creativity is being strangled by the law

11.11.07

via Ayende @ Rahien:

Although the content of Larry’s talk is well worth watching,

He pins down the key shortcomings of our dusty, pre-digital intellectual property laws, and reveals how bad laws beget bad code. Then, in an homage to cutting-edge artistry, he throws in some of the most hilarious remixes you’ve ever seen.

it is the show that impressed me most.

Larry uses a presentation style (slides & talk) that most of you might already know from Dick Hardt’s Identity 2.0 talk. What sets them apart (at least from my point of view) is that Dick overdoes it (a bit), while Larry seems to have found the right dose (for me).

Anyhow, the show is a must-see!

Visual Studio 2008 will be released at the end of this month

07.11.07

S. Somasegar, Vice President of the Developer Division at Microsoft, announced on his blog that Visual Studio 2008 and the .NET Framework 3.5 will be released at the end of this month. The official marketing launch, which will include Windows Server 2008 and SQL Server 2008, is still scheduled for February 2008.

Great news. Get ready!

WCF v2 Features in .NET Framework 3.5

02.11.07

Christian Weyer has published some entries about the new WCF features of .NET 3.5.

In his first post he gives a rough overview of the popular and not-so-popular new features. One of these features is the new Web Programming Model. Although support for RESTful services is an important feature for the next version of the .NET Framework, I’m wondering whether a slick, lightweight solution (such as Mindtouch Dream), which remains true to the principles and simplicity of REST, isn’t much better suited than an all-in-one silver-bullet attempt.

Dominick Baier joins in and writes about Usernames over Transport Authentication in WCF.

In his most recent blog post Christian publishes a list of all updated/new WS-* specs in WCF v2.

Improved URL Mapping for Castle MonoRail

23.10.07

Hammett announced a new MonoRail Routing Engine on his blog. The Routing Engine is responsible for mapping URL patterns to Controller actions, which has been a weakness of MonoRail so far. As Hammett stated on his blog, the need for an improvement has mainly been triggered by the announcement (and features) of the ASP.NET MVC Framework.

The basic idea is to map (friendly) URLs to the actions of your controllers:

Suppose you want to offer listing of say, cars. You want that the urls show the data that is being queries on the resource identifier, not through the query string (have you read about REST?).

Something like:

www.cardealer.com/listings/ -> can list all or show a search page, up to you

www.cardealer.com/listings/new/ -> shows a nationwide list of new cars

www.cardealer.com/listings/old/ -> shows a nationwide list of second hand cars

www.cardealer.com/listings/new/ford -> new ford cars

www.cardealer.com/listings/new/toyota -> new toyota cars

In order to allow for friendly URLs you’ll have to remove all script mappings from your web site and route all requests to the ASP.NET ISAPI extension. Many people don’t like the idea, stating that “no one would do or want to do such a thing”. What’s the problem with this approach? I’ve been developing web applications for several years (as part of enterprise applications) and I’ve never experienced the need to serve static content. What’s the use of databases then? However, if you want to serve static content as well, you might follow David Moore’s suggestion to “have the web site and a separate web site to handle static content (images/css/js)”.

The Routing Engine is configured in the Application Start event:

RoutingModuleEx.Engine.Add(
    PatternRule.Build("bycondition", "listings/<cond:new|old>", typeof(SearchController), "View"));

This piece of code tells the engine to route URL requests such as “/listings/new” or “/listings/old” to the SearchController’s View action (method), which expects a parameter called “cond” whose value is either “new” or “old”. The StandardUrlRules utility class makes it easy to define generic patterns for all actions/methods of a controller.

The code is available from the MonoRail SVN repository.
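The idea behind a rule such as `listings/<cond:new|old>` can be sketched in Python. This is not MonoRail’s implementation or API: the helper names are hypothetical, and the unconstrained `<make>` placeholder is an assumption of this sketch, used to cover URLs like /listings/new/ford from the list above. Each pattern is translated into a regular expression whose named groups become the action’s parameters.

```python
import re

# Matches placeholders such as <cond:new|old> (constrained) or <make> (any segment).
PLACEHOLDER = re.compile(r"<(\w+)(?::([^>]+))?>")


def compile_pattern(pattern):
    """Translate a pattern like 'listings/<cond:new|old>' into a regular expression."""
    regex, pos = "", 0
    for m in PLACEHOLDER.finditer(pattern):
        regex += re.escape(pattern[pos:m.start()])  # literal text before the placeholder
        name, choices = m.group(1), m.group(2)
        if choices:
            # <name:a|b> only accepts the listed alternatives.
            alternatives = "|".join(re.escape(c) for c in choices.split("|"))
            regex += f"(?P<{name}>{alternatives})"
        else:
            # <name> accepts a single path segment (an assumption of this sketch).
            regex += f"(?P<{name}>[^/]+)"
        pos = m.end()
    regex += re.escape(pattern[pos:])
    return re.compile(regex)


RULES = []


def add_rule(name, pattern, controller, action):
    RULES.append((name, compile_pattern(pattern), controller, action))


def route(url):
    """Return (controller, action, parameters) for the first matching rule, else None."""
    path = url.strip("/")
    for _name, regex, controller, action in RULES:
        match = regex.fullmatch(path)
        if match:
            return controller, action, match.groupdict()
    return None


add_rule("bycondition", "listings/<cond:new|old>", "SearchController", "View")
add_rule("bymake", "listings/<cond:new|old>/<make>", "SearchController", "ByMake")  # hypothetical
```

With these two rules, `route("/listings/new/ford")` yields the `ByMake` action with both `cond` and `make` filled in, while an unknown condition such as /listings/used matches no rule.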

ASP.NET MVC Framework

08.10.07

[Update]: Scott Hanselman has posted the videos of the ASP.NET MVC Framework talks.

Scott Guthrie announces the ASP.NET MVC Framework at the ALT.NET conference. There were rumors about an MVC Framework for ASP.NET as early as March 2007: Jeffrey Palermo wrote about a special meeting with Scott Guthrie at the MVP Summit. Now Scott has officially announced the framework and says we can expect a first CTP within the next two months and a V1 in spring 2008. Jeffrey has the details.

He names some of the goals:

- Natively support TDD model for controllers.

- Provide ASPX (without viewstate or postbacks) as a view engine

- Provide a hook for other view engines from MonoRail, etc.

- Support IoC containers for controller creation and DI on the controllers

- Provide complete control over URLs and navigation

- Be pluggable throughout

- Separation of concerns

- Integrate nicely within ASP.NET

- Support static as well as dynamic languages

Nima Dilmaghani provides further details on Scott’s talk and the integration points for existing ASP.NET technology and other frameworks such as Castle’s MonoRail and Windsor. Even Roy Osherove praises the new MVC Framework for ASP.NET:

My take away - finally they get it. I wish there were more guthries out there in the b0rg.

Regarding the impact on MonoRail Jeffrey says:

MonoRail is MVC. This is MVC, so yes, it’s very similar but different. This gives us a controller that executes before a view ever comes into play, and it simplifies ASPX as a view engine by getting rid of viewstate and server-side postbacks with the event lifecycle. That’s about it. MonoRail is much more. MonoRail has tight integration with Windsor, ActiveRecord and several view engines. MonoRail is more than just the MVC part. I wouldn’t be surprised if MonoRail were refactored to take advantage of the ASP.NET MVC HttpHandler just as a means to reduce the codebase a bit. I think it would be a very easy move, and it would probably encourage MonoRail adoption (even beyond its current popularity).

The Castle PMC has a similar take on the issue:

We also believe that MonoRail has been providing the same thing for the past two and half years, and will continue to do so. We’re grateful that MS has chosen to offer integration points for Monorail and the Castle stack and as soon as it’s available we will be working to integrate it with the rest of our projects.

Is MS’ MVC better? Worse? Only once we have used both will we be able to tell.

I would like to agree with Jeffrey that this might encourage MonoRail adoption. At least both frameworks would share some common concepts, and the programming models wouldn’t differ that much anymore. But common sense tells me that MSFT might do business as usual, i.e. make a (bad) copy of a good concept and get rid of the competing framework/tool along the way. I’ll have to put my faith in Scott ;-) (who has proven several times that he deserves it).

Microsoft Releases Source Code for the .NET Framework

03.10.07

Scott Guthrie announced today that Microsoft will offer “the ability for .NET developers to download and browse the source code of the .NET Framework libraries, and to easily enable debugging support in them” later this year.

According to Daniel Moth:

The cool bit is not that you can just read the framework code in your favourite text editor once you download and accept the license; no, the real goodness is that when you debug your applications with Visual Studio 2008 you will have the option to debug right down into the Framework code (with an autodownload feature from an MSDN server)!

Scott Hanselman has a Podcast on the topic and Channel9 will be publishing a video at the end of the week.

Duck Typing in C#

27.09.07

I stumbled upon David Meyer’s Duck Typing Project while searching for information about the DLR:

The duck typing library is a .NET class library written in C# that enables duck typing. Duck typing is a principle of dynamic typing in which an object’s current set of methods and properties determines the valid semantics, rather than its inheritance from a particular class. (For a detailed explanation, see the Wikipedia article.)

Beyond simple duck typing, this library has evolved to include different forms of variance in class members and other advanced features.

Very cool: “Although there is a performance hit when a new proxy type is generated, use of the casted object is virtually as fast to call as the object itself. Reflection.Emit is used to emit IL that directly calls the original object.”
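The core idea, checking an object against an interface by its members rather than its class and then handing out a proxy that forwards calls, can be sketched in Python. Python is duck-typed already, so this only mimics the cast-to-interface step; the library itself emits IL with Reflection.Emit, which this sketch does not attempt. `duck_cast`, `Quacker`, and the sample classes are hypothetical.

```python
import inspect


class Quacker:
    """The 'interface': only the public method names matter here."""

    def quack(self) -> str: ...

    def walk(self) -> str: ...


def required_methods(interface):
    return [name for name, _ in inspect.getmembers(interface, inspect.isfunction)
            if not name.startswith("_")]


class DuckProxy:
    def __init__(self, target, interface):
        self._target = target
        self._allowed = set(required_methods(interface))

    def __getattr__(self, name):
        # Only members declared on the interface are exposed; calls go straight to the target.
        if name not in self._allowed:
            raise AttributeError(name)
        return getattr(self._target, name)


def duck_cast(obj, interface):
    """Check the object's members once, at cast time, then return a forwarding proxy."""
    missing = [m for m in required_methods(interface) if not callable(getattr(obj, m, None))]
    if missing:
        raise TypeError(f"{type(obj).__name__} is missing {missing}")
    return DuckProxy(obj, interface)


class Robot:  # not derived from Quacker
    def quack(self):
        return "beep-quack"

    def walk(self):
        return "rolling"

    def recharge(self):
        return "charging"


class Stone:
    def walk(self):
        return "not really"
```

`duck_cast(Robot(), Quacker)` succeeds although `Robot` never inherits from `Quacker`, while `duck_cast(Stone(), Quacker)` fails because `quack` is missing. After the one-time check, each call is just an attribute lookup plus delegation.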

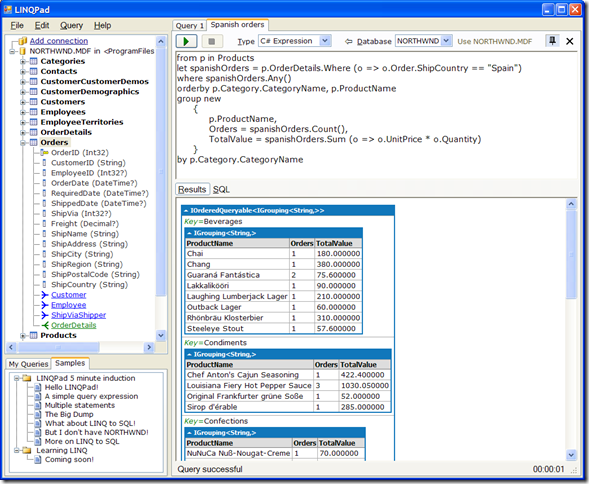

Issue Dynamic LINQ Queries to SQL Databases with LINQPad

27.09.07

Can’t wait for C# 3.0 and LINQ? Well you don’t have to! Dynamically query SQL databases today in LINQ: no more struggling with antiquated SQL. Download LINQPad and kiss goodbye to SQL Management Studio: LINQPad supports LINQ to objects, LINQ to SQL and LINQ to XML—in fact, everything in C# 3.0 and .NET Framework 3.5. LINQPad is also a terrific learning tool for experimenting with this exciting new technology.

Joseph Albahari, co-author of C# 3.0 in a Nutshell, has published LINQPad (Beta) on his website. LINQPad requires .NET Framework 3.5 Beta 2 and lets you issue dynamic LINQ queries against SQL Server databases. It’s a great way to get acquainted with LINQ and to learn how it works. Go, get it!

Astoria Updated for Visual Studio 2008 Beta 2

24.09.07

Project Astoria has been updated to run with Visual Studio 2008 Beta 2. Nicholas Allen has the details.

MSBuild vs. NAnt

24.09.07

Tomas Restrepo has posted a nice comparison of MSBuild and NAnt. Although I’ve been using MSBuild for a long time, I’m thinking of giving NAnt a try. MSBuild is missing some useful tasks, especially for deploying web applications and configuring IIS. Community tasks are available, but they very often lack the properties I need to customize them to my requirements.

My recent efforts to migrate a web application from WebForms to MonoRail might have influenced my decision to have a look at NAnt (Castle is built with an NAnt script).

Castle RC3 has been released

24.09.07

It’s been a long time, but finally Castle Project Release Candidate 3 has been released. Hammett has published the list of changes and the current release can be downloaded here.

Castle is an open source project for .net that aspires to simplify the development of enterprise and web applications. Offering a set of tools (working together or independently) and integration with others open source projects, Castle helps you get more done with less code and in less time.

The main components of Castle are:

- MonoRail - an MVC web framework inspired by ActionPack (Ruby on Rails)

- ActiveRecord - an ORM based on NHibernate

- Windsor - an inversion of control container

You can also download the current development bits from the Subversion repository. An NAnt build script is included.

Know your LINQ

10.09.07

Ian Griffiths writes about the problems he ran into while using LINQ for real. In LINQ to SQL, Aggregates, EntitySet, and Quantum Mechanics he starts with aggregates and null values:

The first issue is that an aggregate might return null. How would that happen? One way is if your query finds no rows. Here’s an example: […]

decimal maxPrice = ctx.Products
    .Where(product => product.Color == "Puce")
    .Max(product => product.ListPrice);

This code fragment will throw an InvalidOperationException, because the query evaluates to null and a null value cannot be assigned to a decimal. The problem lies in the signature of the Max extension method, which returns a TResult instead of a TResult? (nullable return type). This is by design: a TResult? return type would require TResult to be a value type, which would rule out reference types in your lambdas. Ian provides a rather simple solution to the problem:

If we want Max to return us a nullable type, we need to make sure the selector we provide returns one. That’s fairly straightforward:

decimal? maxPrice = ctx.Products
    .Where(product => product.Color == "Puce")
    .Max(product => (decimal?) product.ListPrice);

We’re forcing the lambda passed to Max to return a nullable decimal. This in turn causes Max to return a nullable decimal. So now, when this aggregate query evaluates to null, maxPrice gets set to null – no more error.
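Python’s built-in `max` behaves much like the non-nullable overload: on an empty sequence it raises an error instead of returning a value. The sketch below is only an analogy (no database is involved and the product list is made-up sample data); the `default=None` argument plays the role of the `decimal?` cast by giving the empty case an explicit "no value" result.

```python
from decimal import Decimal

# Made-up sample data standing in for ctx.Products.
products = [
    {"name": "Frame", "color": "Black", "list_price": Decimal("1431.50")},
    {"name": "Helmet", "color": "Red", "list_price": Decimal("34.99")},
]


def max_price(color):
    prices = (p["list_price"] for p in products if p["color"] == color)
    # Without default=None, max() raises ValueError when nothing matches,
    # roughly comparable to the exception in the non-nullable LINQ query.
    return max(prices, default=None)
```

`max_price("Puce")` returns `None` instead of raising, so the caller has to handle the "no rows" case explicitly, just as with a `decimal?` result.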

The rest of his article discusses issues that arise when combining queries to use aggregate functions across relationships. Be sure to read every line of the article; it’s worth your time. There’s no point in summarizing it here, because the topic is a rather complex one. The one thing to point out is that using LINQ requires knowing LINQ:

The critical point here is to know what LINQ is doing for you. The ability to follow relationships between tables using simple property syntax in C# can simplify some code considerably. With appropriately-formed queries, LINQ to SQL’s smart transformation from C# to SQL will generate efficient queries when you use these relationships. But if you’re not aware of what the LINQ operations you’re using do, it’s easy to cause big performance problems.

As always, a sound understanding of your tools is essential.

There’s nothing left to say.
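What “knowing what LINQ is doing for you” means in practice can be illustrated one level down, at the database. The sketch uses Python’s `sqlite3` module with a hypothetical `order_lines` table and compares aggregating in the database with loading the related rows and aggregating in memory, reporting how many rows come back. It is an analogy, not LINQ to SQL, which can produce either kind of SQL depending on how a query is written.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE order_lines (order_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO order_lines VALUES (?, ?)",
    [(1, 10.0), (1, 25.0), (1, 5.0), (2, 7.5)],
)


def max_amount_in_memory(order_id):
    # Comparable to aggregating over an already-loaded collection:
    # every related row is transferred before the maximum is computed.
    rows = conn.execute(
        "SELECT amount FROM order_lines WHERE order_id = ?", (order_id,)
    ).fetchall()
    return max((amount for (amount,) in rows), default=None), len(rows)


def max_amount_in_database(order_id):
    # Aggregate pushed into SQL: a single row with a single value comes back.
    rows = conn.execute(
        "SELECT MAX(amount) FROM order_lines WHERE order_id = ?", (order_id,)
    ).fetchall()
    return rows[0][0], len(rows)
```

Both functions return the same maximum, but the in-memory version transfers every matching row; with large relationships that difference is where the performance problems come from.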

Contract-first vs. Code-first Web Services Development

29.08.07

Dare Obasanjo is commenting on an InfoQ article entitled “Code First” Web Services Reconsidered. He states that the question shouldn’t be about whether you should prefer contract-first or code-first:

The only real consideration when deciding between “code first” and “contract first” approaches is whether your service is concerned primarily with objects or whether it is concerned primarily with XML documents [preferrably with a predefined schema]. If you are just moving around data objects (i.e. your data model isn’t much more complex than JSON) then you are better off using a “code first” approach especially since most SOAP toolkits can handle the basic constructs that would result from generating WSDLs from such types. On the other hand, if you are transmitting documents that have a predefined schema (e.g. XBRL or OOXML documents) then you are better off authoring the WSDL by hand than trying to jump through hoops to get the XML<->object mapping technology in your off-the-shelf SOAP toolkit to do a great job with these schemas.

According to Dare, the main problem lies in the impedance mismatch between W3C XML Schema (XSD) and the objects of a typical OO system. Every SOAP web service publishes a service contract that describes its document types using XML Schema. The problem is that XML Schema offers a much richer and more complex type system than any mainstream OO language. Interoperability issues therefore cannot be avoided when relying on XML (de)serialization: simple types are usually mapped correctly, but complex types often pose problems for the serialization engine.
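“Code first” means the contract is derived from your types. The following minimal Python sketch shows that direction with a hypothetical helper, far simpler than any real SOAP toolkit, that maps a dataclass to an XSD `complexType`. It only knows a handful of primitive types, which illustrates Dare’s point: simple fields map cleanly, while XSD features such as choices, facets, or substitution groups have no counterpart in the class at all.

```python
from dataclasses import dataclass, fields

# Assumed type mapping for this sketch only.
XSD_TYPES = {int: "xs:int", str: "xs:string", float: "xs:double", bool: "xs:boolean"}


def to_complex_type(cls) -> str:
    """Derive an XSD complexType (as text) from a dataclass: the code-first direction."""
    lines = [f'<xs:complexType name="{cls.__name__}">', "  <xs:sequence>"]
    for f in fields(cls):
        xsd_type = XSD_TYPES.get(f.type)
        if xsd_type is None:
            raise TypeError(f"no XSD mapping for field {f.name!r}")
        lines.append(f'    <xs:element name="{f.name}" type="{xsd_type}"/>')
    lines += ["  </xs:sequence>", "</xs:complexType>"]
    return "\n".join(lines)


@dataclass
class Customer:
    id: int
    name: str
    active: bool


print(to_complex_type(Customer))
```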

Dare suggests turning to RESTful Web Services, which sidestep the problem by simply doing without XML Schema-based contracts:

One of the interesting consequences of the adoption of RESTful Web services is that these interoperability issues have been avoided because most people who provide these services do not provide schemas in the W3C XML Schema. […] That way XML geeks like me get to party on the raw XML which is in a simple and straightforward format while the folks who are scared of XML can party on objects using their favorite programming language and platform. The best of both worlds.

Although I was once rightly placed on the SOAP side of the Tilkov Scale, I’m starting to switch sides, for better or for worse …

Dependency Injection - Good or Bad Practice?

29.08.07

Jacob Proffitt shares his musings about Dependency Injection on his blog. He gives an example of a data access pattern that can be implemented either by managing the data access provider directly or by injecting the provider or the connection via Dependency Injection. He concludes that

The benefit to this pattern is that the class is now disconnected from the data provider. The disadvantage is that now my calling code has to handle the provider.
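The two variants Jacob describes look roughly like this in a Python sketch (all class names are hypothetical): one class creates its data provider itself, the other receives it through its constructor, which moves the decision to the caller and also lets a test pass in a fake.

```python
class SqlProvider:
    def fetch_customers(self):
        # Stand-in for a real database call.
        return ["from database"]


class CustomerListDirect:
    """Manages its own dependency: easy to call, but fixed to SqlProvider."""

    def __init__(self):
        self._provider = SqlProvider()

    def names(self):
        return self._provider.fetch_customers()


class CustomerListInjected:
    """Receives its dependency: decoupled, but the caller must supply a provider."""

    def __init__(self, provider):
        self._provider = provider

    def names(self):
        return self._provider.fetch_customers()


class FakeProvider:
    """Test double: the main reason, in Jacob's view, why DI became popular."""

    def fetch_customers(self):
        return ["test customer"]
```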

In his opinion Dependency Injection has mainly been “hyped” by Unit Testing frameworks using mock objects:

The real reason that DI has become so popular lately, however, has nothing to do with orthogonality, encapsulation, or other “purely” architectural concerns. The real reason that so many developers are using DI is to facilitate Unit Testing using mock objects.

There are several mock object libraries whose typical use relies on dependency injection, e.g. NMock and Rhino.Mocks. Jacob refers to TypeMock as an example of a “Superior .Net Mocking” solution, which “claims to allow you to mock objects used by your classes without having to expose those internal objects at all”. He closes by saying:

And why am I still hearing about the virtues of a pattern whose sole perceptible benefit is allowing mock objects in Unit Tests?

Nate Kohari disagrees and starts a rather lengthy discussion in the comments to Jacob’s post. He says that

The real benefits of DI appear when you use a framework (sometimes called an “inversion of control container”) to support it. When you request an instance of a type, a DI framework can build an entire object graph, wiring up dependencies as it goes.

He refers to two examples of IoC containers, namely Castle Windsor and his own framework Ninject. Jacob isn’t convinced and responds:

How can you say that dependency injection […] creates loosely coupled units that can be reused easily when the whole point of DI is to require the caller to provide the callee’s needs? That’s an increase in coupling by any reasonable assessment.

Nate responds to this argument on his blog by defending Dependency Injection:

This is why dependency injection frameworks like Ninject, Castle Windsor, and StructureMap exist: they fix this coupling problem by washing your code clean of the dependency resolution logic. In addition, they provide a deterministic point, in code or a mapping file, that describes how the types in your code are wired together.

He also responds to Jacob’s argument that the Factory pattern already solves all the problems DI claims to address:

Factory patterns are great for small implementations, but like dependency-injection-by-hand it can get extremely cumbersome in larger projects. Abstract Factories are unwieldy at best, and relying on a bunch of static Factory Methods (to steal a phrase from Bob Lee) gives your code “static cling” — static methods are the ultimate in concreteness, and make it vastly more difficult to alter your code.

He continues in the comments on his post, which are absolutely worth reading:

Now, let’s consider the DI vs. provider model argument. The real benefit of DI over a provider model (abstract factory) is the ability to wire up multiple levels of the object graph at once. With an abstract factory, you can get different implementations for a specific dependency. However, wiring the dependencies of the dependencies (and so on) is not part of the equation, unless you have a bunch of abstract factories.
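Nate’s point about wiring several levels of the object graph at once can be illustrated with a deliberately tiny container. This is a hypothetical Python sketch, not how Ninject, Windsor, or StructureMap work internally: it reads constructor type hints and recursively builds whatever each constructor needs, so the caller asks for the top-level type only. It has no lifetimes, configuration files, or cycle detection.

```python
import inspect
from typing import get_type_hints


class Container:
    """Deliberately tiny DI container (hypothetical, for illustration only)."""

    def __init__(self):
        self._bindings = {}  # requested type -> concrete type to build

    def bind(self, requested, concrete):
        self._bindings[requested] = concrete

    def resolve(self, requested):
        concrete = self._bindings.get(requested, requested)
        init = concrete.__init__
        # Classes without their own __init__ inherit object.__init__, which has no hints.
        hints = get_type_hints(init) if inspect.isfunction(init) else {}
        hints.pop("return", None)
        # Resolve every constructor dependency first (recursively, no cycle detection),
        # then build the requested object itself.
        dependencies = {name: self.resolve(dep) for name, dep in hints.items()}
        return concrete(**dependencies)


class Connection:
    def __init__(self):
        self.url = "db://example"  # hypothetical connection string


class Cache:
    """Abstract dependency; the container decides which implementation to use."""


class MemoryCache(Cache):
    def __init__(self):
        self.items = {}


class Repository:
    def __init__(self, connection: Connection, cache: Cache):
        self.connection = connection
        self.cache = cache


class ReportService:
    def __init__(self, repository: Repository):
        self.repository = repository


container = Container()
container.bind(Cache, MemoryCache)
service = container.resolve(ReportService)  # builds the whole graph in one call
```

Swapping `MemoryCache` for another implementation is a one-line change in the bindings, and none of the three service classes contains construction logic; with an abstract factory, each level would need its own factory.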

I generally agree with Nate, but I would suggest using dependency injection sparingly. You don’t have to (in fact, you shouldn’t) describe every dependency between types in a central mapping file. Although your dependencies would then be described in one place and could be changed at deployment time, the remaining code becomes very generic and difficult to understand, because its inner workings, which are injected at runtime, are missing from it. Some dependencies are implementation details and should remain within your code; others sit on a higher level and are good candidates for DI.